It is time to examine low performance in learning assessments

Meeting the monitoring goal of ensuring that no one is left behind in learning depends on the ability to distinguish degrees of low performance. If an assessment is too difficult, some learners will not be able to answer any question correctly. Scores then suffer from a ‘floor effect’, with too many students scoring zero. When, for instance, 40% of learners in a country score zero, it would be helpful to know how performance varies within this very low-performing group. Without the ability to distinguish levels and trends among the lowest performers, it is difficult to tell whether interventions aimed at them work.

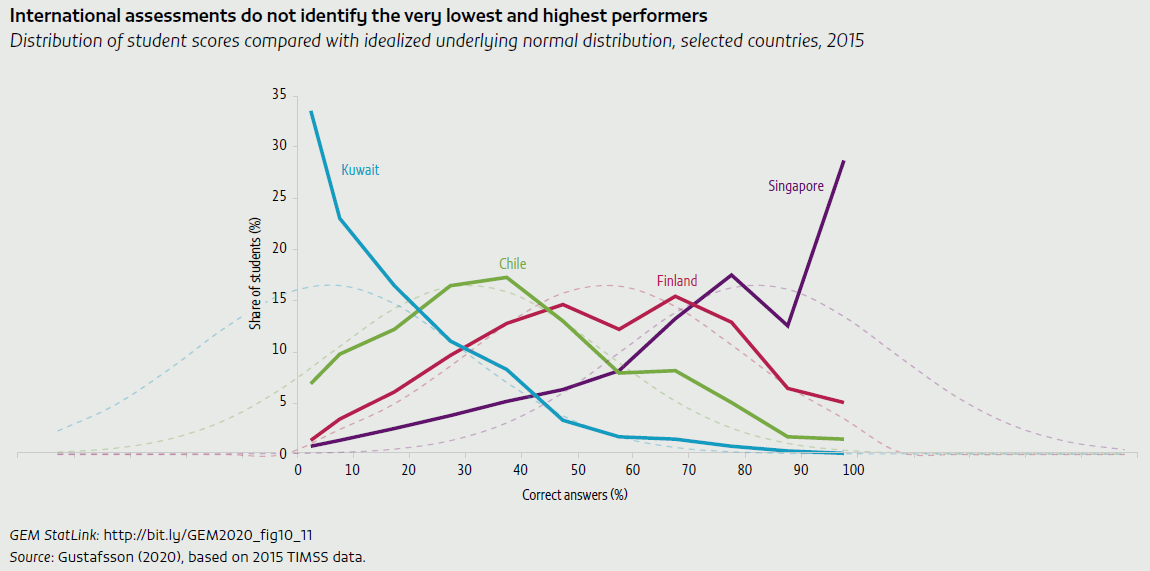

The challenge is particularly obvious in international assessments calibrated to a common scale rather than to the range of proficiency among a country’s learners. For instance, among countries that took part in the 2015 IEA Trends in International Mathematics and Science Study (TIMSS), many students in Kuwait ran up against the scale floor, while many in Singapore were limited by the scale ceiling. This makes the gap between the two countries appear smaller than it is.
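A stylized illustration of why hitting the floor and the ceiling at the same time shrinks an observed gap: the sketch below treats the scale floor and ceiling as hard limits, which is a simplification (TIMSS scores are not literally truncated; the test simply carries little information outside its range). All scores, and the floor and ceiling values, are hypothetical.

```python
from statistics import mean


def clip_to_scale(scores, floor, ceiling):
    """Censor scores at a hypothetical floor and ceiling of what a test can measure."""
    return [min(max(s, floor), ceiling) for s in scores]


def mean_gap(high, low):
    """Difference between the average scores of two groups."""
    return mean(high) - mean(low)


# Hypothetical "true" achievement of five students in each of two countries.
low_country = [150, 250, 300, 350, 400]
high_country = [550, 650, 700, 750, 850]
FLOOR, CEILING = 300, 700  # hypothetical limits of the measurable range

true_gap = mean_gap(high_country, low_country)
observed_gap = mean_gap(
    clip_to_scale(high_country, FLOOR, CEILING),
    clip_to_scale(low_country, FLOOR, CEILING),
)
print(true_gap, observed_gap)  # true gap 410, observed gap 330
```

Students below the floor are pulled up and students above the ceiling are pulled down, so both means move towards each other and the gap is understated.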

Even national assessments built on assumptions about grade-level competences may be poorly targeted. In many developing countries, skills specified in the curriculum tend to be well above what students in that grade actually learn (Pritchett and Beatty, 2012). A test focused only on curriculum-specified competences is therefore likely to be too difficult for many students.

Item response theory (IRT) is one way to ensure assessments better differentiate among students at the low end of achievement. IRT scores take into account the difficulty of each item. If two students answer the same number of questions correctly, but one student correctly answers more difficult questions, that student receives a higher IRT score. Capacity for IRT scoring is weak in many countries, but investing in such capacity has several benefits, including more informative results regarding low-performing schools and students. IRT scoring can also be used to refine each student’s score, using individual background data to predict variation across students with a raw score of zero (Martin et al., 2016).
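A minimal sketch of why background data helps at a raw score of zero, assuming a simple Rasch (one-parameter) model and hypothetical item difficulties. Under maximum likelihood, a zero score has no finite estimate, because the likelihood keeps rising as ability falls. Combining the likelihood with a normal prior whose mean depends on a background group, and reporting the posterior mean (an "expected a posteriori", or EAP, estimate), gives zero scorers in different groups different scores. This is only loosely analogous to the latent regression and plausible values used operationally in TIMSS; the group prior means (`prior_mean`) are invented for illustration.

```python
import math


def rasch_p(theta, b):
    """Probability of a correct answer under the Rasch model (ability theta, difficulty b)."""
    return 1.0 / (1.0 + math.exp(-(theta - b)))


def log_likelihood(theta, responses, difficulties):
    """Log-likelihood of a 0/1 response pattern at ability theta."""
    ll = 0.0
    for x, b in zip(responses, difficulties):
        p = rasch_p(theta, b)
        ll += math.log(p) if x == 1 else math.log(1.0 - p)
    return ll


def eap_estimate(responses, difficulties, prior_mean, prior_sd=1.0):
    """Posterior mean ability on a grid from -6 to 6, using a normal prior."""
    grid = [-6.0 + 0.01 * k for k in range(1201)]
    weights = []
    for t in grid:
        prior = math.exp(-0.5 * ((t - prior_mean) / prior_sd) ** 2)
        weights.append(prior * math.exp(log_likelihood(t, responses, difficulties)))
    total = sum(weights)
    return sum(t * w for t, w in zip(grid, weights)) / total


difficulties = [-0.5, 0.0, 0.5, 1.0, 1.5]  # hypothetical: a test that is hard for these learners
zero_score = [0, 0, 0, 0, 0]

# No finite maximum likelihood estimate: the likelihood of a zero score keeps rising as theta falls.
print(log_likelihood(-4.0, zero_score, difficulties) > log_likelihood(-2.0, zero_score, difficulties))  # True

# Hypothetical background groups (e.g. fewer vs more books at home) enter only through the prior mean.
eap_fewer_books = eap_estimate(zero_score, difficulties, prior_mean=-1.0)
eap_more_books = eap_estimate(zero_score, difficulties, prior_mean=0.0)
print(eap_fewer_books < eap_more_books)  # True
```

The raw score is identical for both learners; only the background-dependent prior separates them, which is why such estimates describe groups of zero scorers better than individuals.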

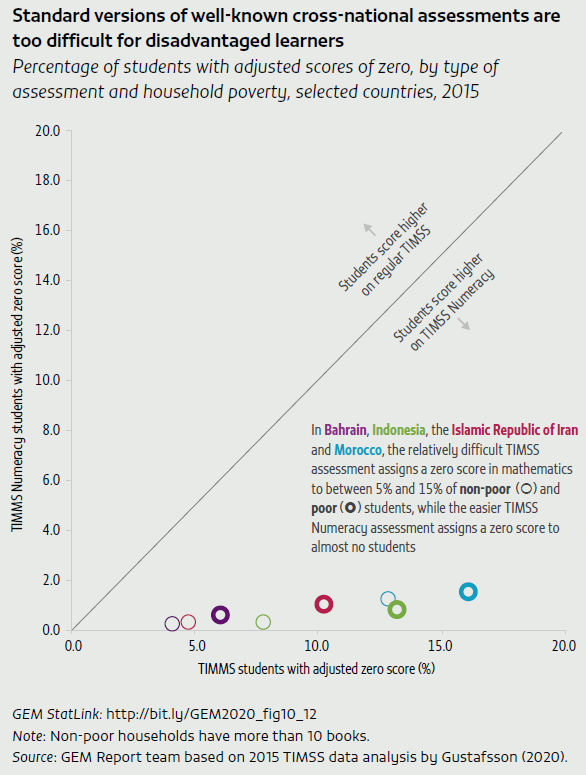

A recent study simulated how much more reliable PISA results for specific countries would be if test items were easier. It finds that there are good reasons for low- and middle-income countries to use PISA or TIMSS variants that are easier than the tests administered in high-income countries (Rutkowski et al., 2019). Since the 2015 TIMSS, some participating countries have tested grade 4 students using either the regular TIMSS or a new, less demanding TIMSS Numeracy assessment intended to counteract floor effects. In 2015, grade 4 students in Bahrain, Indonesia, the Islamic Republic of Iran, Kuwait and Morocco were randomly assigned to take either test. In terms of IRT scores calculated by the IEA, differences between the regular TIMSS and TIMSS Numeracy were barely noticeable. In other words, IRT scores from the regular TIMSS are fairly successful at differentiating students even at the low end, in part due to the imputations mentioned above (Gustafsson, 2020).
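One way to see why easier items make results more reliable for low performers is the test information function. The sketch below assumes a Rasch model, in which each item contributes p(1 - p) to the information at ability θ and the standard error of measurement is 1/√I(θ). The item difficulties are hypothetical, and this is not the simulation design used by Rutkowski et al. (2019).

```python
import math


def rasch_p(theta, b):
    """Probability of a correct answer under the Rasch model."""
    return 1.0 / (1.0 + math.exp(-(theta - b)))


def total_information(theta, difficulties):
    """Test information at ability theta: the sum of p * (1 - p) over items."""
    total = 0.0
    for b in difficulties:
        p = rasch_p(theta, b)
        total += p * (1.0 - p)
    return total


def standard_error(theta, difficulties):
    """Standard error of measurement implied by the test information."""
    return 1.0 / math.sqrt(total_information(theta, difficulties))


# Hypothetical 20-item tests, difficulties in logits.
regular = [0.0, 0.5, 1.0, 1.5, 2.0] * 4
easier = [b - 1.5 for b in regular]  # the same items made 1.5 logits easier

low_theta = -2.0  # a hypothetical low performer
print(standard_error(low_theta, regular), standard_error(low_theta, easier))
```

Items contribute most information near the ability level where a learner has an even chance of answering them, so shifting difficulty down gives a noticeably smaller standard error for the low performer in this sketch.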

Relying on tests that are too difficult is nevertheless problematic, because comparing IRT scores does not account for random guessing on multiple choice questions. Floor effects may therefore come into play even before scores hit zero. With multiple choice questions in particular, what is informative about a learner’s knowledge is not the raw number of correct answers but how much better the learner did than would be expected from random guessing. This margin can be estimated, including for a test that mixes multiple choice questions with items requiring learners to construct responses (Burton, 2001).
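The reach of pure guessing can be sketched by treating each item as a coin flip with its own chance of success: 1/k for a k-option multiple choice item and, by assumption, zero for a constructed response item. The resulting score distribution is Poisson-binomial, and a raw score can be called distinguishable from guessing once pure guessing would reach it less than 5% of the time. The 5% cut-off, the function names and the four-option format are illustrative assumptions, not the method of Burton (2001) or Gustafsson (2020).

```python
def guessing_distribution(chance_probs):
    """Distribution of the raw score if every answer is a blind guess.

    chance_probs[i] is the chance of getting item i right by guessing:
    1/k for a k-option multiple choice item, 0 for a constructed response item.
    Returns dist with dist[s] = P(raw score == s) (a Poisson-binomial pmf).
    """
    dist = [1.0]
    for p in chance_probs:
        new = [0.0] * (len(dist) + 1)
        for s, prob in enumerate(dist):
            new[s] += prob * (1.0 - p)  # item answered wrongly
            new[s + 1] += prob * p      # item answered correctly by chance
        dist = new
    return dist


def guessing_threshold(chance_probs, alpha=0.05):
    """Smallest raw score that pure guessing reaches with probability below alpha."""
    dist = guessing_distribution(chance_probs)
    for s in range(len(dist)):
        if sum(dist[s:]) < alpha:  # upper tail P(score >= s)
            return s
    return len(dist)


# Hypothetical test: 15 four-option multiple choice items plus 12 constructed response items.
items = [0.25] * 15 + [0.0] * 12
print(sum(items))                 # 3.75 correct answers expected from guessing alone
print(guessing_threshold(items))  # 8
```

Under these assumptions a learner needs at least 8 correct answers before the score stands out from chance, well above the 3.75 expected from guessing, which is why floor effects can appear long before raw scores reach zero.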

For example, in Kuwait, 34% of students scored zero on 12 constructed response questions, while 3% scored zero on 15 multiple choice questions. When the results are adjusted for random guessing, it appears likely that the achievement of around 25% of students was actually too low to be estimated on the multiple choice part (Gustafsson, 2020). Largely as a result of the introduction of TIMSS Numeracy, the number of countries considered by the IEA to suffer from reliability problems due to floor effects declined from five in 2011 to two in 2015 (Mullis et al., 2016). While this holds on average, differences emerged by socio-economic status, as measured by the number of books in the household. Among less disadvantaged students, after adjusting for random guessing, many fewer had an effective zero score on TIMSS Numeracy than on the regular TIMSS.
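A simple, long-established adjustment that makes the idea of an "effective zero" concrete is the classical correction for guessing (formula scoring): subtract W/(k - 1) from the number of right answers R, where W is the number of wrong answers on k-option items and omitted items are ignored. Its expected value under pure guessing is zero, so a corrected score at or below zero can be read as an effective zero. This is an illustration only; the adjustment used in the analyses cited here may differ, and the four-option format is an assumption.

```python
def formula_score(right, wrong, options):
    """Classical correction for guessing: R - W / (k - 1).

    Omitted items count as neither right nor wrong. If every answered item
    is a blind guess among k options, the expected corrected score is zero.
    """
    if options < 2:
        raise ValueError("a multiple choice item needs at least two options")
    return right - wrong / (options - 1)


def is_effective_zero(right, wrong, options):
    """Treat a corrected score at or below zero as indistinguishable from guessing."""
    return formula_score(right, wrong, options) <= 0


# Hypothetical learners on 15 four-option multiple choice items.
print(formula_score(3, 12, 4), is_effective_zero(3, 12, 4))    # -1.0 True
print(formula_score(8, 7, 4) > 0, is_effective_zero(8, 7, 4))  # True False
```

A learner with 3 of 15 correct has a positive raw score but does worse than the 3.75 expected from guessing, so the corrected score is negative and counts as an effective zero.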

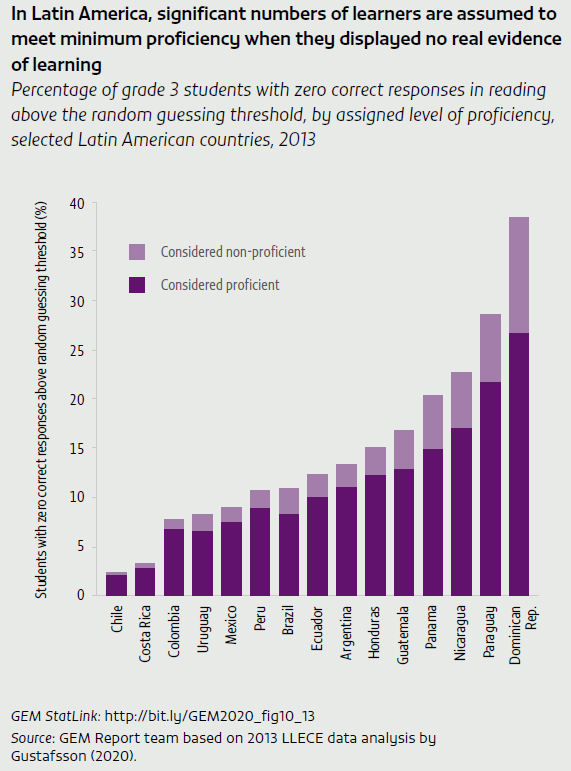

The regional assessments organized by LLECE in Latin America (whose third round in 2013 was commonly known as TERCE, its fourth in 2019 as ERCE) suffer particularly serious floor effects. In every country, in grades 3 and 6 reading and mathematics, the percentage of students with zero scores exceeds the percentage of students officially reported as below the minimum proficiency level.

In grade 3 mathematics in TERCE, it is possible to identify learners whose raw scores were indistinguishable from random guessing but who were nevertheless considered proficient. Likewise, three-quarters of students who did no better than random guessing on multiple choice questions were considered proficient in reading. These students may have higher IRT scores than those in the bottom group (classified as below the minimum proficiency level), but after controlling for random guessing, there is too little information about students in either group to say much about what they can and cannot do. In other words, LLECE assessments do not include enough easy items to produce meaningful information about the most marginalized students (Gustafsson, 2020).

Comparing the magnitude of floor effects with average performance across large-scale assessments yields both good and bad news. The bad news is that some assessments are clearly too difficult for average learners in some countries, especially in mathematics: on regional assessments in Latin America and in southern and eastern Africa, up to 37% of students fail to score above the random guessing threshold (Gustafsson, 2020).

The good news is that calibrating difficulty so that average students can answer at least half the questions correctly generally seems to allow the vast majority of students to display measurable performance, limiting the floor effect to 10% at most. However, the comparison also shows that the assessments that largely manage to reduce floor effects to acceptable levels have fewer multiple choice and more constructed response items. While the very large floor effects seen in some countries can be reduced, eliminating them completely would require substantially different approaches to testing, which are costlier and more complex to develop, score and compare across countries.

References

The full list of references can be found at this link.